Multi-output Gaussian process regression

December 2, 2020 — November 26, 2021

Multi-task learning in GP regression by assuming the vector of outputs is jointly distributed as a multivariate Gaussian process.

WARNING: Under heavy construction ATM, and makes no sense yet.

My favourite introduction is by Eric Perim, Wessel Bruinsma, and Will Tebbutt, in a series of blog posts spun off a paper (Bruinsma et al. 2020). The posts attempt to unify various approaches to defining vector-valued GPs, and thereby derive an efficient method incorporating the good features of all of them. A unifying approach feels necessary; there is a lot of terminology going on.

- Gaussian Processes: from one to many outputs

- Scaling multi-output Gaussian process models with exact inference

- Implementing a scalable multi-output GP model with exact inference

Now that I have this tool I am going to summarise it for myself to get a better understanding. It will probably supplant some of the older material below, and maybe also some of the GP factoring material.

To define in their terms: Co-regionalization…

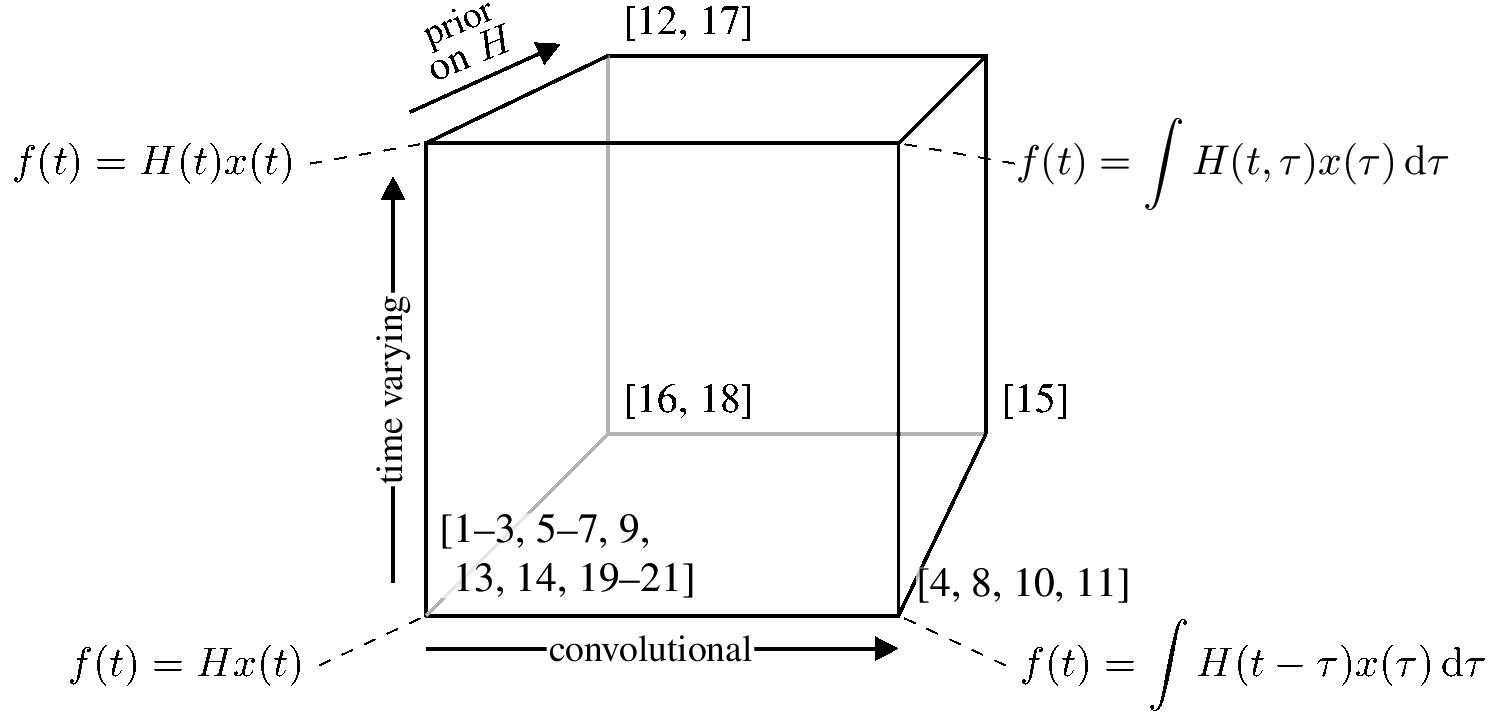

The essential insight is that in practice we probably assume a low-rank structure (to be defined) for the cross-covariance matrix, which means that the vector-valued GP is some kind of linear mixing of scalar GPs. There are only so many ways that can be done, as summarised in their diagram:

We could of course assume non-linear mixing, but then we are doing something else, perhaps variational autoencoding or deep GPs.
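To make the linear-mixing idea concrete for myself, here is a minimal NumPy sketch, not the method of Bruinsma et al. It assumes a model of the form y(t) = H x(t), where x(t) stacks m independent scalar GPs and H is a p × m mixing matrix. The squared-exponential kernel, the lengthscales, the sizes `n`, `p`, `m`, and the random `H` are all illustrative assumptions of mine.

```python
import numpy as np

def rbf_kernel(t1, t2, lengthscale):
    """Squared-exponential kernel matrix between two 1-D input arrays."""
    diff = t1[:, None] - t2[None, :]
    return np.exp(-0.5 * (diff / lengthscale) ** 2)

rng = np.random.default_rng(0)

n, p, m = 50, 4, 2                # inputs, outputs, latent GPs (m < p: low rank)
t = np.linspace(0.0, 10.0, n)
lengthscales = [0.5, 2.0]         # one assumed lengthscale per latent GP
H = rng.normal(size=(p, m))       # assumed mixing matrix, random for illustration

# Kernel matrix of each independent latent scalar GP.
K_latent = [rbf_kernel(t, t, ls) for ls in lengthscales]

# Covariance of all outputs stacked output-by-output:
# K_full = sum_i kron(h_i h_i^T, K_i), where h_i is the i-th column of H.
K_full = sum(
    np.kron(np.outer(H[:, i], H[:, i]), K_latent[i]) for i in range(m)
)

# Sample the latent GPs (with jitter for numerical stability), then mix them.
jitter = 1e-6
x = np.stack([
    np.linalg.cholesky(K + jitter * np.eye(n)) @ rng.normal(size=n)
    for K in K_latent
])                                # shape (m, n)
y = H @ x                         # shape (p, n): p correlated output series

# At any single input the cross-output covariance is H H^T (since k_i(t, t) = 1),
# so its rank is at most m however many outputs we have.
B = H @ H.T
rank_B = np.linalg.matrix_rank(B)
```

With the same kernel for every latent process, `K_full` collapses to `np.kron(B, K)`, which I understand to be the intrinsic co-regionalization special case; giving each latent process its own kernel, as here, is the more general linear mixing.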

1 Tooling

Most of the GP toolkits do multi-output as well.

Here is one with some interesting documentation.

- GAMES-UChile/mogptk: Multi-Output Gaussian Process Toolkit

- mogptk/00_Quick_Start.ipynb at master · GAMES-UChile/mogptk

> This repository provides a toolkit to perform multi-output GP regression with kernels that are designed to utilize correlation information among channels in order to better model signals. The toolkit is mainly targeted to time-series, and includes plotting functions for the case of single input with multiple outputs (time series with several channels).
>
> The main kernel corresponds to Multi Output Spectral Mixture Kernel, which correlates every pair of data points (irrespective of their channel of origin) to model the signals. This kernel is specified in detail in Parra and Tobar (2017).