Deep Gaussian process regression

May 13, 2021

Gaussian

generative

Hilbert space

kernel tricks

regression

spatial

stochastic processes

time series

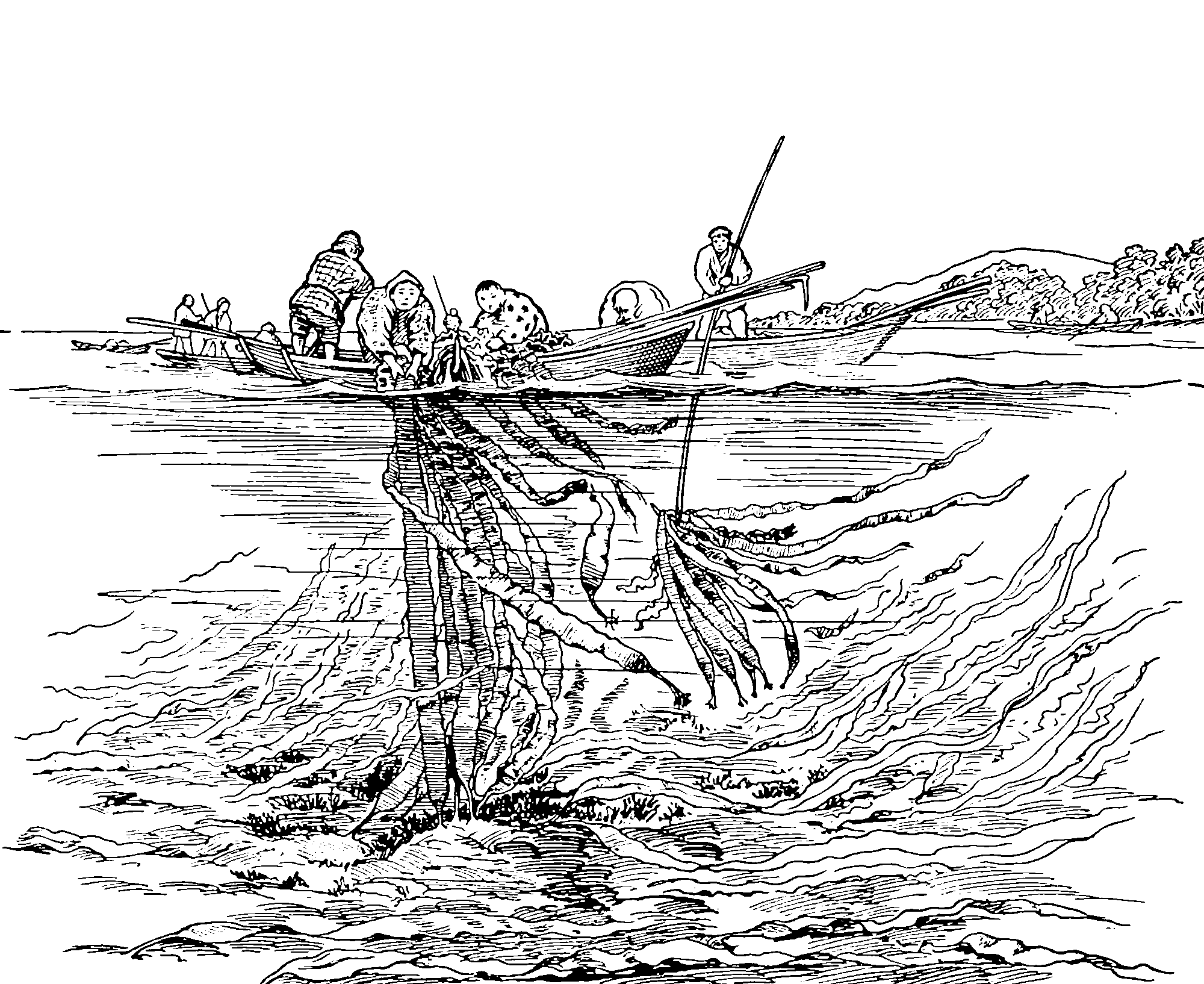

Gaussian process layer cake.

1 Platonic ideal

TBD.

2 Approximation with dropout

See NN ensembles.
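As a rough illustration of the dropout approximation this section's heading refers to (in the sense of Gal and Ghahramani 2015), here is a minimal sketch of Monte Carlo dropout: dropout stays switched on at prediction time, and the mean and variance over many stochastic forward passes serve as an approximate predictive distribution. The function name `mc_dropout_predict`, the tanh network, the dropout rate, and the random untrained weights are all assumptions made for illustration, not anything specified on this page.

```python
import numpy as np

def mc_dropout_predict(x, weights, biases, p_drop=0.1, n_samples=200, seed=0):
    """Monte Carlo dropout over a small MLP (hypothetical helper).

    x: (n, d_in) inputs; weights/biases: per-layer parameters of an
    already-fitted network. Returns the sample mean and variance of the
    outputs over n_samples stochastic forward passes.
    """
    rng = np.random.default_rng(seed)
    draws = []
    for _ in range(n_samples):
        h = x
        for i, (W, b) in enumerate(zip(weights, biases)):
            # Drop each input unit of this layer independently, then rescale
            # the survivors (inverted dropout) so the expected value is unchanged.
            mask = rng.random(h.shape) >= p_drop
            h = (h * mask / (1.0 - p_drop)) @ W + b
            if i < len(weights) - 1:
                h = np.tanh(h)  # nonlinearity on hidden layers only
        draws.append(h)
    draws = np.stack(draws)  # shape (n_samples, n, d_out)
    return draws.mean(axis=0), draws.var(axis=0)

# Toy usage with random, untrained weights, purely to show the shapes involved.
rng = np.random.default_rng(1)
weights = [rng.normal(size=(1, 32)), rng.normal(size=(32, 1)) / np.sqrt(32)]
biases = [np.zeros(32), np.zeros(1)]
x = np.linspace(-2.0, 2.0, 5).reshape(-1, 1)
mean, var = mc_dropout_predict(x, weights, biases)
```

The sample variance here captures only the spread induced by the dropout masks; a full predictive variance would also add an observation-noise term, which this sketch leaves out.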

3 References

Cutajar, Bonilla, Michiardi, et al. 2017. “Random Feature Expansions for Deep Gaussian Processes.” In PMLR.

Damianou, and Lawrence. 2013. “Deep Gaussian Processes.” In Artificial Intelligence and Statistics.

Domingos. 2020. “Every Model Learned by Gradient Descent Is Approximately a Kernel Machine.” arXiv:2012.00152 [Cs, Stat].

Dunlop, Girolami, Stuart, et al. 2018. “How Deep Are Deep Gaussian Processes?” Journal of Machine Learning Research.

Dutordoir, Hensman, van der Wilk, et al. 2021. “Deep Neural Networks as Point Estimates for Deep Gaussian Processes.” arXiv:2105.04504 [Cs, Stat].

Gal, and Ghahramani. 2015. “Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning.” In Proceedings of the 33rd International Conference on Machine Learning (ICML-16).

———. 2016. “A Theoretically Grounded Application of Dropout in Recurrent Neural Networks.” arXiv:1512.05287 [Stat].

Jankowiak, Pleiss, and Gardner. 2020. “Deep Sigma Point Processes.” In Conference on Uncertainty in Artificial Intelligence.

Kingma, Salimans, and Welling. 2015. “Variational Dropout and the Local Reparameterization Trick.” In Proceedings of the 28th International Conference on Neural Information Processing Systems - Volume 2. NIPS’15.

Lee, Bahri, Novak, et al. 2018. “Deep Neural Networks as Gaussian Processes.” In ICLR.

Leibfried, Dutordoir, John, et al. 2022. “A Tutorial on Sparse Gaussian Processes and Variational Inference.”

Mattos, Dai, Damianou, et al. 2017. “Deep Recurrent Gaussian Processes for Outlier-Robust System Identification.” Journal of Process Control, DYCOPS-CAB 2016.

Molchanov, Ashukha, and Vetrov. 2017. “Variational Dropout Sparsifies Deep Neural Networks.” In Proceedings of ICML.

Noack, Luo, and Risser. 2023. “A Unifying Perspective on Non-Stationary Kernels for Deeper Gaussian Processes.”

Ritter, Kukla, Zhang, et al. 2021. “Sparse Uncertainty Representation in Deep Learning with Inducing Weights.” arXiv:2105.14594 [Cs, Stat].

Salimbeni, and Deisenroth. 2017. “Doubly Stochastic Variational Inference for Deep Gaussian Processes.” In Advances In Neural Information Processing Systems.