Science; Institution design for

“Scientist, falsify thyself”. Peer review, academic incentives, credentials, evidence and funding…

May 17, 2020 — August 7, 2023

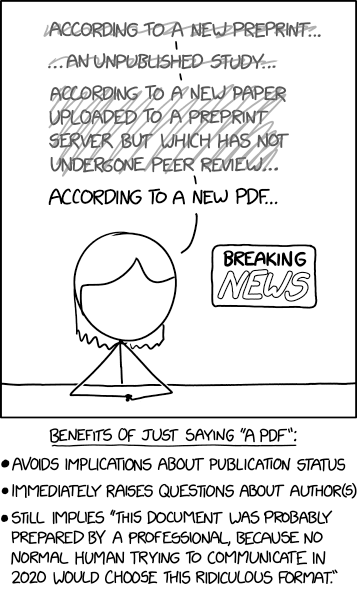

On the thing that I presume academic publishing is supposed to do: further science. Reputation systems and other mechanisms for trust in science, a.k.a. our collective knowledge of reality itself.

I would like to consider the system of peer review, networking, conferencing, publishing and acclaim, see how closely it approximates an ideal system for uncovering truth, and further, imagine how we could make a better system. But I do not do that right now; I just collect some provocative links on that theme, in the hope of finding time for more thought later.

1 Open review processes, practical

PubPeer (who are behind Peeriodicals) produces a peer-review overlay for web browsers that spreads their commentary and peer critique more widely. The site itself is brusquely confusing but well blogged; you’ll get the idea. They are not afraid of invective, and I thought they looked more amateurish than effective. But I was wrong: they are quite selective, and they seem to be near the best elective peer review available today. This system has been implicated in topical high-profile retractions (e.g. 1, 2).

- Related question: How do we discover research to peer review?

2 Mathematical models of the reviewing process

See e.g. Cole, Jr, and Simon (1981); Lindsey (1988); Ragone et al. (2013); Nihar B. Shah et al. (2016); Whitehurst (1984).

The experimental data from the NeurIPS experiments might be useful; see e.g. Nihar B. Shah et al. (2016) or a blog post on the 2014 experiment (1, 2).
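The NeurIPS 2014 experiment sent a subset of submissions to two independent committees and compared their decisions. A toy model of why such committees can disagree: give every paper a latent "true quality", let each committee see that quality plus independent noise, and have each accept its top fraction. The sketch below is a hypothetical illustration, not the model from any of the papers cited above; the Gaussian quality and noise, the function name `simulate_two_committees`, and the default parameter values are all assumptions chosen for clarity, not estimates from the NeurIPS data.

```python
import random


def simulate_two_committees(n_papers=1000, accept_rate=0.25, noise_sd=1.0, seed=0):
    """Toy noisy-review model (hypothetical; parameters are illustrative assumptions).

    Each paper has latent quality q ~ N(0, 1). Each of two committees scores
    it as q + N(0, noise_sd^2) noise, independently, and accepts its top
    `accept_rate` fraction. Returns the fraction of committee A's accepted
    papers that committee B also accepted.
    """
    rng = random.Random(seed)
    quality = [rng.gauss(0.0, 1.0) for _ in range(n_papers)]
    k = max(1, int(round(accept_rate * n_papers)))  # number of acceptances per committee

    def accepted_set():
        # One committee's noisy reading of every paper's quality.
        scores = [q + rng.gauss(0.0, noise_sd) for q in quality]
        ranked = sorted(range(n_papers), key=lambda i: scores[i], reverse=True)
        return set(ranked[:k])

    committee_a = accepted_set()
    committee_b = accepted_set()
    return len(committee_a & committee_b) / k


if __name__ == "__main__":
    for sd in (0.0, 0.5, 1.0, 2.0, 10.0):
        print(f"noise_sd={sd:>4}: overlap={simulate_two_committees(noise_sd=sd):.2f}")
```

With no noise the two committees agree perfectly; as reviewer noise grows, the overlap falls toward the accept rate itself, which is what two committees picking at random would achieve. Under this toy model, the observed agreement between real committees can be read as a rough signal of how noisy reviewing is relative to the spread in paper quality.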

3 Economics of publishing

See academic publishing.

4 Mechanism design for peer review process

There is some fun mechanism design in this, e.g. Charlin and Zemel (2013); Gasparyan et al. (2015); Jan (n.d.); Merrifield and Saari (2009); Solomon (2007); Xiao, Dörfler, and van der Schaar (2014); Xu, Zhao, and Shi (n.d.).

An interesting edge case in peer review and scientific reputation: Adam Becker, Junk Science or the Real Thing? ‘Inference’ Publishes Both. As far as I’m concerned, publishing crap is not in itself catastrophic. What would be bad is a process that fails to discourage crap by eventually identifying it.

5 How well does academia gatekeep?

Baldwin (2018):

This essay traces the history of refereeing at specialist scientific journals and at funding bodies and shows that it was only in the late twentieth century that peer review came to be seen as a process central to scientific practice. Throughout the nineteenth century and into much of the twentieth, external referee reports were considered an optional part of journal editing or grant making. The idea that refereeing is a requirement for scientific legitimacy seems to have arisen first in the Cold War United States. In the 1970s, in the wake of a series of attacks on scientific funding, American scientists faced a dilemma: there was increasing pressure for science to be accountable to those who funded it, but scientists wanted to ensure their continuing influence over funding decisions. Scientists and their supporters cast expert refereeing—or “peer review,” as it was increasingly called—as the crucial process that ensured the credibility of science as a whole. Taking funding decisions out of expert hands, they argued, would be a corruption of science itself. This public elevation of peer review both reinforced and spread the belief that only peer-reviewed science was scientifically legitimate.

Thomas Basbøll says:

It is commonplace today to talk about “knowledge production” and the university as a site of innovation. But the institution was never designed to “produce” something nor even to be especially innovative. Its function was to conserve what we know. It just happens to be in the nature of knowledge that it cannot be conserved if it does not grow.

Andrew Marzoni, Academia is a cult.

Adam Becker on the assumptions and pathologies revealed by Wolfram’s latest branding and positioning:

So why did Wolfram announce his ideas this way? Why not go the traditional route? “I don’t really believe in anonymous peer review,” he says. “I think it’s corrupt. It’s all a giant story of somewhat corrupt gaming, I would say. I think it’s sort of inevitable that happens with these very large systems. It’s a pity.”

So what are Wolfram’s goals? He says he wants the attention and feedback of the physics community. But his unconventional approach—soliciting public comments on an exceedingly long paper—almost ensures it shall remain obscure. Wolfram says he wants physicists’ respect. The ones consulted for this story said gaining it would require him to recognize and engage with the prior work of others in the scientific community.

And when provided with some of the responses from other physicists regarding his work, Wolfram is singularly unenthused. “I’m disappointed by the naivete of the questions that you’re communicating,” he grumbles. “I deserve better.”

6 Style guide for reviews and rebuttals

See scientific writing.

7 Reformers

8 Incoming

Matthew Feeney, Markets in fact-checking

Saloni Dattani, Real peer review has never been tried

Matt Clancy, What does peer review know?

Adam Mastroianni, The rise and fall of peer review

Étienne Fortier-Dubois, Why Is ‘Nature’ Prestigious?

Science and the Dumpster Fire | Elements of Evolutionary Anthropology

F1000Research | Open Access Publishing Platform | Beyond a Research Journal

F1000Research is an Open Research publishing platform for scientists, scholars and clinicians offering rapid publication of articles and other research outputs without editorial bias. All articles benefit from transparent peer review and editorial guidance on making all source data openly available.

Reviewing is a Contract - Rieck on the social expectations of reviewing and chairing.

Andrew Gelman in conversation with Noah Smith:

Anyway, one other thing I wanted to get your thoughts on was the publication system and the quality of published research. The replication crisis and other skeptical reviews of empirical work have got lots of people thinking about ways to systematically improve the quality of what gets published in journals. Apart from things you’ve already mentioned, do you have any suggestions for doing that?

I wrote about some potential solutions in pages 19–21 of Gelman (2018) from a few years ago. But it’s hard to give more than my personal impression. As statisticians or methodologists we rake people over the coals for jumping to causal conclusions based on uncontrolled data, but when it comes to science reform, we’re all too quick to say, Do this or Do that. Fair enough: policy exists already and we shouldn’t wait on definitive evidence before moving forward to reform science publication, any more than journals waited on such evidence before growing to become what they are today. But we should just be aware of the role of theory and assumptions in making such recommendations. Eric Loken and I made this point several years ago in the context of statistics teaching (Gelman and Loken 2012), and Berna Devezer et al. published an article (Devezer et al. 2020) last year critically examining some of the assumptions that have at times been taken for granted in science reform. When talking about reform, there are so many useful directions to go, I don’t know where to start. There’s post-publication review (which, among other things, should be much more efficient than the current system for reasons discussed here), there are all sorts of things having to do with incentives and norms (for example, I’ve argued that one reason that scientists act so defensive when their work is criticized is because of how they’re trained to react to referee reports in the journal review process), and various ideas adapted to specific fields. One idea I saw recently that I liked was from the psychology researcher Gerd Gigerenzer, who wrote that we should consider stimuli in an experiment as being a sample from a population rather than thinking of them as fixed rules (Gigerenzer n.d.), which is an interesting idea in part because of its connection to issues of external validity or out-of-sample generalization that are so important when trying to make statements about the outside world.

Jocelynn Pearl proposes some fun ideas, including blockchainy ones, in Time for a Change: How Scientific Publishing is Changing For The Better.