Learning from ranking, learning to predict ranking

Learning preferences, ordinal regressions etc

September 16, 2020 — November 22, 2023

Ordinal data is ordered, but the differences between items may fail to possess a magnitude, e.g. I like mangoes more than apples, but how would I quantify the difference in my preference?

Learning from preferences was made famous by its use in fine-tuning language models, e.g. RLHF and kin (Zhu, Jordan, and Jiao 2023; Ziegler et al. 2019).

Connection to order statistics, probably, and presumably quantile regression.

If it is not clear already, I do not know much about this topic; I just wanted to keep track of it.

1 Learning with inconsistent preferences

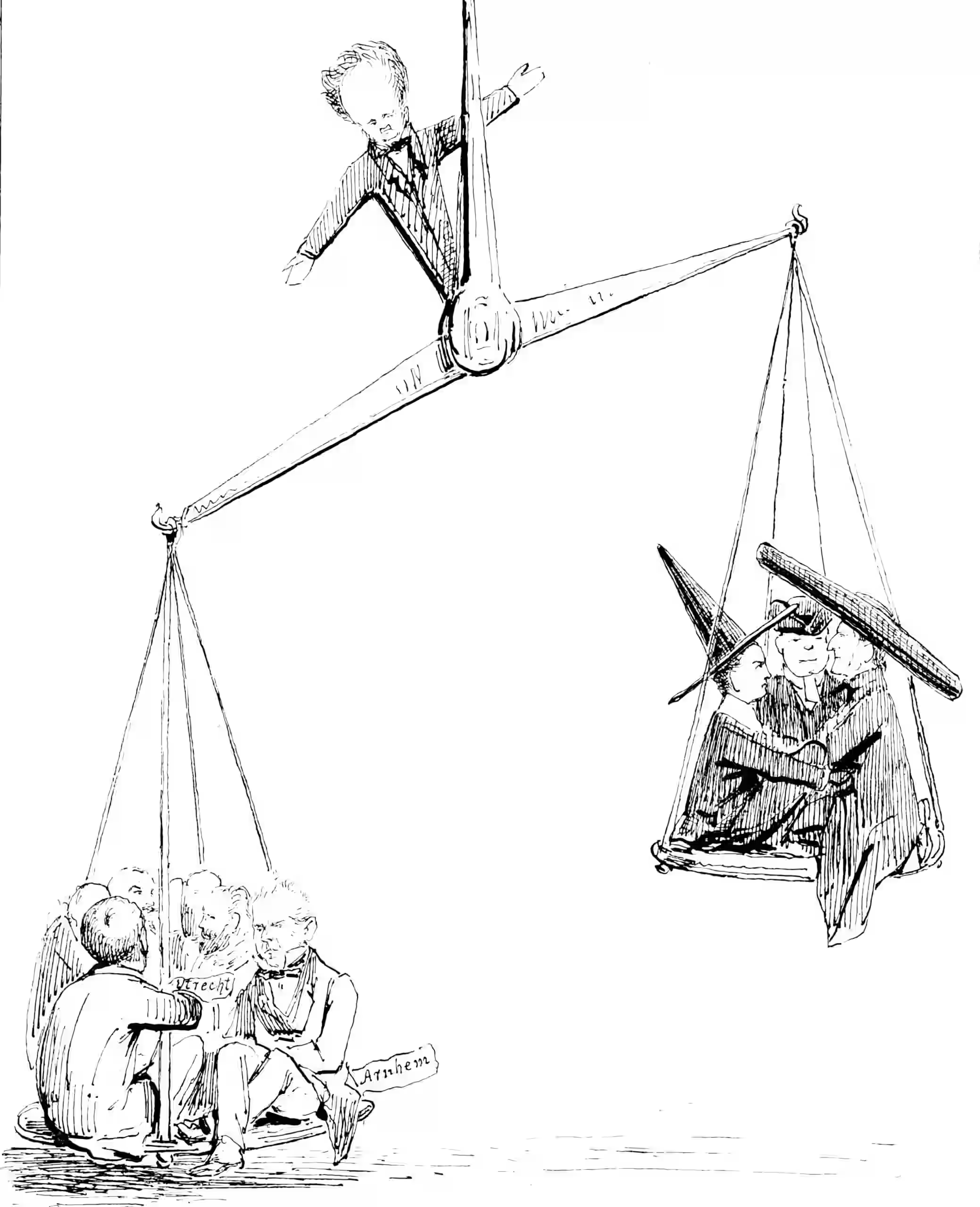

Do we need an ordinal ranking to be consistent? Arrow’s theorem might remind us of an important case in which preferences are not consistent: when they are the preferences of many people in aggregate, i.e., when we are voting. Some algorithms make use of an analogous generalisation (Adachi et al. 2023; Chau, Gonzalez, and Sejdinovic 2022).
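To make the aggregate-inconsistency point concrete, here is a minimal sketch of the classic Condorcet cycle, a close relative of the situation Arrow’s theorem addresses rather than the theorem itself. The three ballots and the helper names (`majority_prefers`, `pairwise_majorities`) are hypothetical, chosen only for illustration. Each voter is individually consistent, yet the pairwise majority preference is cyclic.

```python
from itertools import combinations

# Three hypothetical voters, each with a consistent ranking (best first).
ballots = [
    ["A", "B", "C"],
    ["B", "C", "A"],
    ["C", "A", "B"],
]


def majority_prefers(x, y, ballots):
    """True if a strict majority of ballots rank x above y."""
    wins = sum(b.index(x) < b.index(y) for b in ballots)
    return wins > len(ballots) / 2


def pairwise_majorities(ballots):
    """Return the set of (winner, loser) pairs under pairwise majority vote."""
    items = sorted(ballots[0])
    edges = set()
    for x, y in combinations(items, 2):
        if majority_prefers(x, y, ballots):
            edges.add((x, y))
        elif majority_prefers(y, x, ballots):
            edges.add((y, x))
    return edges


edges = pairwise_majorities(ballots)
print(sorted(edges))
```

The majority prefers A to B, B to C, and C to A, so no single consistent ranking reproduces the aggregate preferences.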

2 Incoming

- Bradley–Terry model

- Discrete choice models

- Ordinal regression

- OpenAI, Learning from human preferences

- Connection to contrastive estimation, which has a ranking variate
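As a placeholder for the Bradley–Terry item above, here is a minimal sketch of fitting that model to pairwise comparison counts. The model assumes item i beats item j with probability p_i / (p_i + p_j). The fitting loop is the standard minorisation-maximisation (MM) update, not a method taken from any of the references here; the function name `fit_bradley_terry` and the win counts are hypothetical. It assumes every item wins at least once and that the comparison graph is connected, otherwise the maximum-likelihood estimate need not exist.

```python
def fit_bradley_terry(wins, n_iter=200):
    """Estimate Bradley-Terry strengths from a win-count matrix.

    wins[i][j] is the number of times item i beat item j.
    Returns strengths normalised to sum to 1.
    """
    n = len(wins)
    p = [1.0 / n] * n  # start from equal strengths
    for _ in range(n_iter):
        new_p = []
        for i in range(n):
            total_wins = sum(wins[i])
            # Each opponent contributes (games played) / (p_i + p_j).
            denom = sum(
                (wins[i][j] + wins[j][i]) / (p[i] + p[j])
                for j in range(n)
                if j != i
            )
            new_p.append(total_wins / denom)
        scale = sum(new_p)
        p = [v / scale for v in new_p]
    return p


# Hypothetical counts: item 0 beat item 1 seven times, lost three times, etc.
wins = [
    [0, 7, 9],
    [3, 0, 6],
    [1, 4, 0],
]
strengths = fit_bradley_terry(wins)
prob_0_beats_1 = strengths[0] / (strengths[0] + strengths[1])
print(strengths, prob_0_beats_1)
```

The fitted strengths induce a total ranking even though only pairwise outcomes were observed, which is one way to attach a magnitude to ordinal comparisons.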