Incentive alignment problems

What is your loss function?

September 22, 2014 — September 8, 2023

Placeholder to discuss alignment problems in AI, economic mechanisms and institutions.

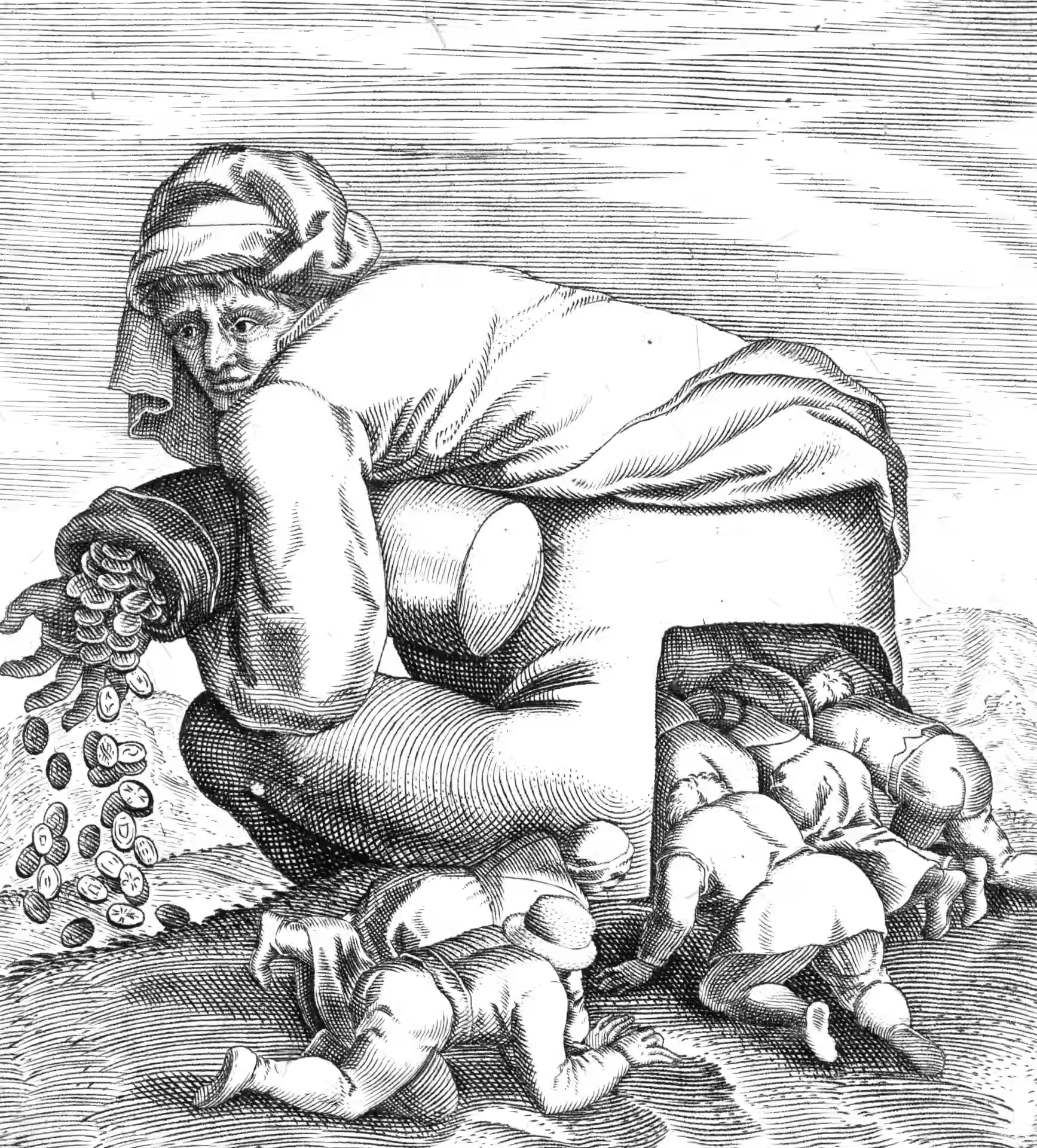

Many things to unpack. What do we imagine we are aligning to, when our own goals are themselves a diverse evolutionary epiphenomenon? Does everything ultimately Goodhart? Is that the origin of Moloch?
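One mechanical reading of "everything Goodharts" is the regressional version: if you select hard on a noisy proxy, the winners' proxy scores systematically overstate their true value. The sketch below is a toy model under loudly stated assumptions (true value and proxy noise are independent Gaussians, and the function name `goodhart_gap` is hypothetical), not a claim about any particular metric or institution.

```python
import random
import statistics


def goodhart_gap(n=10_000, k=100, noise_sd=1.0, seed=0):
    """Select the top-k items by a noisy proxy and compare the winners'
    average proxy score with their average true value.

    Hypothetical toy model (an assumption, not from the source):
    true value V ~ N(0, 1); proxy U = V + E with E ~ N(0, noise_sd^2),
    E independent of V.
    """
    rng = random.Random(seed)
    items = []
    for _ in range(n):
        true_value = rng.gauss(0.0, 1.0)
        proxy = true_value + rng.gauss(0.0, noise_sd)
        items.append((proxy, true_value))
    # "Optimise the metric": keep the k items with the highest proxy score.
    winners = sorted(items, reverse=True)[:k]
    proxy_mean = statistics.mean(p for p, _ in winners)
    true_mean = statistics.mean(v for _, v in winners)
    return proxy_mean, true_mean


proxy_mean, true_mean = goodhart_gap()
print(f"proxy says {proxy_mean:.2f}, reality delivers {true_mean:.2f}")
```

Selection on the proxy still buys some real value here, but less than the metric reports; with `noise_sd=0.0` the gap vanishes, because the proxy then is the target.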

1 Incoming

Billionaires? Elites? Minorities? Classes? Capitalism? Socialism? It is alignment problems all the way down.

Joe Edelman, Is Anything Worth Maximizing? How metrics shape markets, how we’re doing them wrong

Metrics are how an algorithm or an organization listens to you. If you want to listen to one person, you can just sit with them and see how they're doing. If you want to listen to a whole city — a million people — you have to use metrics and analytics.

and

What would it be like, if we could actually incentivize what we want out of life? If we incentivized lives well lived.

Google made an A.I. so woke it drove men mad - by Max Read

For me, arguing that the chatbots should not be able to simulate hateful speech is tantamount to saying we shouldn't simulate car crashes. In my line of work, simulating things is precisely how we learn to prevent them. Generally, if something is terrible, it is very important to understand it in order to avoid it. It seems to me that how hateful content arises can be studied through simulation, just as car crashes can. I would like to avoid both hate and car crashes.

I am not impressed by efforts to restrict what thoughts the machines can express. I think these robots are terribly, fiercely, catastrophically dangerous, but the furore about whether they sound mean does not seem to me terribly relevant to that danger.

Kareem Carr more rigorously describes what he thinks people imagine the machines should do, which he calls a solution. He does, IMO, articulate beautifully what is going on.

I resist calling it a solution, because I think the problem is ill-defined. Equity is purpose-specific and has no universal solution. In this ill-defined domain, where the purpose of the models is not clear (placating pundits? fuelling Twitter discourse?), there is not much specific to say about how to make a content generator equitable. That is not a criticism of his explanation, though.